Johns Hopkins computer scientists have created an artificial intelligence system capable of “imagining” its surroundings without having to physically explore them, bringing AI closer to humanlike reasoning.

The new system—called Generative World Explorer, or GenEx—needs only a single still image to conjure an entire world, giving it a significant advantage over previous systems that required a robot or agent to physically move through a scene to map the surrounding environment, which can be costly, unsafe, and time-consuming. The team’s results appear on the open-access archive arXiv.org.

“Say you’re in an area you’ve never been before—as a human, you use environmental cues, past experiences, and your knowledge of the world to imagine what might be around the corner,” says senior author Alan Yuille, the Bloomberg Distinguished Professor of Computational Cognitive Science at Johns Hopkins. “GenEx ‘imagines’ and reasons about its environment the way humans do, making educated decisions about what steps it should take next without having to physically check its environment first.”

GenEx uses sophisticated world knowledge to generate multiple possibilities of what might exist beyond the visible image, assigning a probability to each scenario rather than making a single definitive guess. This ability to mentally map surroundings from limited visual data is crucial for many real-world applications, such as disaster response. For instance, rescue teams could use a single surveillance image to explore hazardous sites from afar without risk to humans or valuable equipment.

“This technology can also improve navigation apps, assist in training autonomous robots, and power immersive gaming and VR experiences,” says lead author Jieneng Chen, a PhD student in computer science.

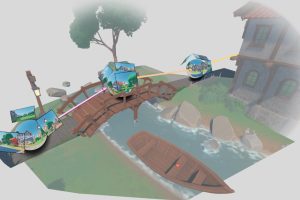

From a single image, GenEx generates a realistic, synthetic virtual world where AI agents can navigate and make decisions through reasoning and planning. The agent needs only a view of its current scene, a direction of movement, and the distance to traverse. As demonstrated in the animation below, the agent can move forward, change direction, and explore its environment with unlimited flexibility.

Digital rendering of AI navigating a synthetic virtual world. Image courtesy of the Whiting School of Engineering.

And unlike the dreamlike AI world exploration apps now gaining popularity—such as Oasis, an AI-generated Minecraft simulator—GenEx’s environments are consistent. This is because the model was trained on large-scale data with a technique called “spherical consistency learning,” which ensures that its predictions of new environments fit within a panoramic sphere.

“We measure this by having GenEx navigate a randomly sampled closed path, returning to the origin in a fixed loop,” Chen says. “Our goal was to make the start and end views identical, thus ensuring consistency in GenEx’s world modeling.”
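The loop test Chen describes can be illustrated with a toy sketch. This is not GenEx's code: `sample_closed_path`, `toy_view`, `loop_error`, and the `drift` parameter are hypothetical names, and the "view" is a small vector computed from position rather than a generated panorama. The sketch only shows the shape of the measurement: walk a random closed path, then compare the start and end views.

```python
import math
import random


def sample_closed_path(num_steps, max_distance, rng):
    """Sample random (heading, distance) moves, then add one move back to the origin."""
    moves = []
    x = y = 0.0
    for _ in range(num_steps):
        heading = rng.uniform(0.0, 2.0 * math.pi)
        distance = rng.uniform(0.1, max_distance)
        moves.append((heading, distance))
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    # Closing move: head straight back to where the walk started.
    moves.append((math.atan2(-y, -x), math.hypot(x, y)))
    return moves


def toy_view(x, y, size=8):
    """Hypothetical stand-in for a generated view: a vector that depends only on position."""
    return [math.sin(x + 0.5 * i) + math.cos(y - 0.3 * i) for i in range(size)]


def loop_error(moves, drift=0.0, render=toy_view):
    """Walk the path and return the mean squared difference between start and end views.

    `drift` is an assumed per-unit-distance position error that mimics a world
    model whose predictions slowly wander off course.
    """
    x = y = 0.0
    start = render(x, y)
    for heading, distance in moves:
        x += distance * math.cos(heading) + drift * distance
        y += distance * math.sin(heading)
    end = render(x, y)
    return sum((a - b) ** 2 for a, b in zip(start, end)) / len(start)


rng = random.Random(0)
moves = sample_closed_path(num_steps=5, max_distance=2.0, rng=rng)
consistent_error = loop_error(moves)             # essentially zero
drifting_error = loop_error(moves, drift=0.05)   # clearly above zero
```

A consistent world returns nearly the same view at the end of the loop, so the error stays near zero; a model that drifts does not. In a real evaluation the views would be generated images and the comparison would use an image-similarity metric, which this sketch does not attempt to reproduce.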

While this consistency isn’t unique to GenEx, the research team says it is the first generative world explorer to empower AI agents to make logical decisions based on new observations about the world they’re exploring, a process the computer scientists call “imagination-augmented policy.”

For example, say you are driving and the light ahead is green, but you notice that the taxi in front of you has come to an abrupt, unexpected stop. Getting out of your car to investigate would be unsafe, but by imagining the scene from the taxi driver’s perspective, you can come up with a possible reason for their sudden stop: maybe an emergency vehicle is approaching—and you should make way, too.

Rendering of an AI model making an observation-based decision. Image courtesy of the Whiting School of Engineering.

“While humans can use other cues like sirens to identify this kind of situation, current AI models developed for autonomous driving and other similar tasks only have access to image and language inputs, making imaginative exploration necessary in the absence of other multimodal information,” Chen says.

The Hopkins team evaluated the consistency and quality of GenEx’s output against standard video generation benchmarks. The researchers also conducted experiments with human users to determine if and how GenEx could augment their logic and planning abilities and found that users made more accurate and informed decisions when they had access to the model’s exploration capabilities.

“Our experimental results demonstrate that GenEx can generate high-quality, consistent observations during an extended exploration of a large virtual physical world,” Chen says. “Additionally, beliefs updated with the generated observations can inform an existing decision-making model, such as a large language model agent, and even human users to make better plans.”
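One way to picture how an imagined observation can update a belief is a discrete Bayes update applied to the stopped-taxi scenario. The sketch below is an illustration under stated assumptions, not the team's method: the hypotheses, the prior, and the likelihood values are all made up, and `update_belief` is a hypothetical helper.

```python
def update_belief(prior, likelihood):
    """Apply Bayes' rule over a discrete set of hypotheses."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observation has zero probability under every hypothesis")
    return {h: p / total for h, p in unnormalized.items()}


# Made-up prior over why the taxi stopped.
prior = {"emergency_vehicle": 0.2, "pedestrian": 0.3, "breakdown": 0.5}

# Made-up probability that the imagined view from the taxi's position
# shows flashing lights, under each hypothesis.
likelihood = {"emergency_vehicle": 0.9, "pedestrian": 0.05, "breakdown": 0.05}

posterior = update_belief(prior, likelihood)
most_likely = max(posterior, key=posterior.get)
```

With these illustrative numbers, the imagined view shifts most of the probability mass to the emergency-vehicle explanation, which is the kind of updated belief a downstream planner or human user could then act on.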

Joined by Tianmin Shu and Daniel Khashabi—both assistant professors of computer science—and undergraduate student TaiMing Lu, Yuille and Chen will incorporate real-world sensor data and dynamic scenes for more realistic, immersive planning scenarios. Bloomberg Distinguished Professor of Computer Vision and Artificial Intelligence Rama Chellappa and Cheng Peng, an assistant research professor in the Mathematical Institute for Data Science, will help curate the real-world sensor data.

The cross-disciplinary project, which involves computer vision, natural language processing, and cognitive science, marks a significant step toward humanlike intelligence in embodied AI, Yuille says.

Learn more about their work and explore an interactive demo here.