The human brain is an absolute marvel of computing power. Estimates suggest the 3 pounds of tofu-like tissue in our skulls can perform roughly 1 quintillion calculations per second—a feat only recently matched by the world’s top supercomputers.

Yet that statistic, impressive as it is, fails to convey how far the brain's capabilities exceed those of machines. Consider our sense of vision. About half the neurons in our cerebral cortex (the big, lobed, wrinkly outer layer of the brain) play a role in our visual system. That system nearly instantly handles visual tasks we humans find utterly mundane but that can flummox artificial intelligence. For example, a common cybersecurity tactic asks users to identify which images in a set contain, say, a bus or a bicycle: an easy "challenge" for humans that stumps online bots.

Although computer vision is not up to human snuff just yet, scientists have made astonishing advances since the field's inception in the 1960s. Facial recognition on new smartphones has become de rigueur. Self-driving cars are on the cusp of wider adoption, having logged tens of millions of miles on public roads and compiled a safety record that some say surpasses that of human drivers. The medical profession is likewise moving closer to broadly adopting computer vision for clinical use, to help detect tumors and other abnormalities.

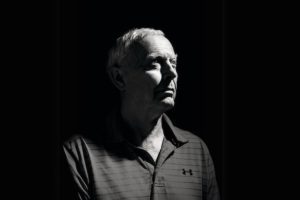

Over the last four decades, Johns Hopkins' Alan Yuille has had a significant impact on the development of computer vision, and he continues to push the field toward more humanlike abilities. For key insights into advancing computer vision, Yuille has drawn on the human visual system, refined in our species over millions of years of evolution.

“I want algorithms that will work in the real world and that will perform at the level of humans, probably ultimately better. And to do that, I think we need to get inspired by the brain,” says Yuille, Bloomberg Distinguished Professor of Computational Cognitive Science at the Whiting School of Engineering and the Krieger School of Arts and Sciences.

Yuille's career has spanned multiple institutions and eras in computer vision, from the field's early conceptual days through the machine learning revolution, in which AI algorithms devour massive datasets of imagery and learn from them. His contributions are tightly woven into the fabric of the field. They range from semantic segmentation, in which computers distinguish classes of objects and backgrounds at the level of individual pixels, to compositionality, in which the whole of an object is represented by the aggregate of its parts.

“What is so fascinating is that when you go to conferences, even the new young superstars know about Alan and are inspired by him,” says Adam Kortylewski, a former postdoctoral researcher in Yuille’s lab at Johns Hopkins and currently a group leader at the University of Freiburg and the Max Planck Institute for Informatics.

Kortylewski credits Yuille’s career success to his enduring perspectives on vision and openness to innovation.

“Alan is convinced of certain ideas, but he’s also flexible enough to then adapt to new insights and new technology. I think he’s one of the very few figures who has this kind of capability,” Kortylewski says. “So even today, the research and new papers coming from his lab are very well-cited and are pioneering work that other people catch up with.”

“ADVENTUROUS” AI

Yuille's path to studying natural cognition, artificial intelligence, and computer vision together was far from linear. He grew up in Highgate, an area of North London bordering a cemetery. (“Karl Marx was buried over behind our backyard wall,” Yuille relates.) His parents, originally from Australia, were steeped in the arts: his mother in English literature, his father in architecture. Yuille remembers thousands of books about visual art in the house. He suspects that those books, along with frequent trips to art museums, made an impression on him, despite his lackluster interest. “I think at the time, I rather reacted against it,” Yuille jokes.

What did rivet Yuille was sports, and as an adult he continues to seek out active pursuits. “I hike up mountains, I ski down mountains. I’ve done hang gliding. I’ve flown a small airplane,” he says. He chalks up his adventure-seeking to “too many Bond films when I was a boy.”

Stephen Hawking (foreground right) meets with a group of his doctoral students at Cambridge University during the late 1970s. Alan Yuille is seated two places to Hawking's right. [Photo courtesy of Alan Yuille]

Before turning to AI, Yuille studied physics at Cambridge as one of Hawking's doctoral students. “Stephen didn’t take things too seriously,” he says, recalling one afternoon when Hawking, who by then had severe symptoms of amyotrophic lateral sclerosis, had Yuille run the computer interface for a Dungeons and Dragons game that Hawking wanted to play.

However, Yuille's research into quantum gravity, a fiendish problem that remains unresolved, foundered. That left him looking for something new but similarly profound. “I thought, ‘Well, AI is sort of something as fundamental as understanding the basic physical laws of the universe.’ Because AI is really understanding human intelligence, and that’s equally fundamental,” says Yuille.

Compared with physics, AI was also an appealingly undeveloped field, not to mention a more practical one.

“I liked the fact that AI would have real-world consequences,” says Yuille. “So that got me into leaving physics and doing AI—because it was new, it was somewhat adventurous.”

VISUAL RUDIMENTS

Pursuing his new field, Yuille joined the Artificial Intelligence Laboratory at MIT in the early 1980s. Perhaps hearkening back to his childhood exposure to the visual arts, he focused his research on computer vision, a fledgling field that had begun at MIT barely 15 years earlier.

“In the 1980s, it was almost like the Wild West,” says Yuille. “We had to start making sense of the whole enormous complexity of vision.”

Physically, vision begins when particles of light, called photons, emitted or reflected by matter strike the retinal cells at the backs of our eyeballs. The resulting nerve impulses travel to the brain's visual cortex. There, vast networks of neurons process the incoming information and construct our richly perceived world of color, shape, texture, and distance.

Seeking a way into this morass, Yuille teamed up with Tomaso Poggio, who had also just joined the faculty at MIT. “We hit it off almost immediately and started working together, maybe because both of us were physicists originally and not computer scientists,” recalls Poggio, who stayed on at MIT and is now the Eugene McDermott Professor in the institute’s Department of Brain and Cognitive Sciences.

Focusing on basic elements of human visual perception and cognition, Yuille and “Tommy,” as he affectionately calls Poggio, studied how computers might detect the lines and edges of objects and use them to make broad inferences about the contents of a visual field. “Finding reliable contours of objects and edges in images at the time was one of the early problems in computer vision,” says Poggio.

During this early phase, much of the work was conceptual and could not be demonstrated at scale, owing to the lack of the computing power and massive datasets that would later transform the field. Yet those constraints meant that Yuille and his colleagues had to proceed from a more theoretical basis, getting at the conceptual underpinnings of vision rather than worrying about go-to-market applications. “I think a lot of the ideas we had were actually pretty good,” says Yuille, “but we didn’t have the models that could take advantage of the big data that we now have.”

MENTAL MODELS FOR VISION

Building on his progress in computer vision, Yuille moved to Harvard University in the mid-1980s as a professor. In 1995 he left for the Smith-Kettlewell Eye Research Institute, a nonprofit research organization in San Francisco. Seven years later, he returned to academia as a professor at UCLA with joint appointments in statistics, computer science, psychiatry, and psychology, an indication of how multidisciplinary his research had become.

Areas where Yuille broke ground along the way include the aforementioned semantic segmentation, a core task for self-driving cars as they find the road and its boundaries and pick out other cars. Another area where Yuille introduced key ideas, one that also ties into compositionality's notion of combining parts to make a whole, is known as “analysis by synthesis.”

The essential notion of analysis by synthesis is that, through experience of the world, humans build up a vast mental library of possible objects. During moment-to-moment vision, we constantly and rapidly form hypotheses about which objects we are likely seeing. Drawing on that mental library, we then cognitively synthesize the hypothesized object, forming a manipulable, virtual version of it. As the milliseconds pass, we keep comparing the observed object with the synthesized one, filling in details and ultimately determining what the object is.

“It’s like you’re saying, ‘Okay, we’re pretty sure this is a face at this angle, now let’s check and get the details correct exactly about whose face it is, where the eyes are if they’re looking at you’—things like that,” says Yuille.

APPREHENDING CANCER

On the strength of these and other breakthroughs in computer vision, Yuille was recruited by Johns Hopkins in 2016 as a Bloomberg Distinguished Professor. A $350 million endowment from Michael R. Bloomberg, Engr ’64, established these influential positions at the university in 2013 to foster interdisciplinary collaborations aimed at tackling particularly complex problems.

“I was attracted to Hopkins because of the idea of trying to relate the study of biology or cognitive science to the study of AI,” says Yuille.

One fruit of this approach has been Yuille's trailblazing research into using computer vision to detect tumors in CT scans. A project that applies this research to pancreatic cancer, one of the deadliest malignancies, is called FELIX, after the magical potion Felix Felicis, or “Liquid Luck,” from the Harry Potter series.

The FELIX project has brought together Yuille's expertise in computer vision and deep neural networks (“deep nets,” in the jargon) with that of Elliot Fishman, a professor of radiology and oncology at the Johns Hopkins University School of Medicine. Clinicians want to catch more pancreatic cancers early, while a cure is still possible. Human radiologists fail to detect tumors in about 40% of CT scans of patients whose tumors are still small (less than 2 centimeters) and surgically removable. The hope is that machine learning algorithms can act as a second pair of expert eyes, reviewing scans and alerting radiologists to tumors they might otherwise have missed.

“The goal is to bring this technology to the clinic so that one day, whenever anyone gets a CT scan, they will have the help of this AI friend,” says Bert Vogelstein, Med ’74 (MD), the Clayton Professor of Oncology at the School of Medicine and a participant in the FELIX project.

Yuille recognized that semantic segmentation and other computer vision techniques could accelerate the project, as could simulating rare, early-stage tumors to train the algorithms more effectively. “Alan has tremendous insights into the underlying principles of deep networks and other machine learning algorithms,” says Vogelstein.

Overall, FELIX has performed excellently, closely matching human skill at detecting larger tumors while also revealing dangerously tiny ones. About one-quarter of the pancreatic cancers FELIX has picked up had not previously been diagnosed, illustrating the approach's immense promise.

Ongoing work aims to validate FELIX for clinical use and to extend it to detecting cancer in other abdominal organs. “These forms of automatic diagnosis could obviously be huge,” says Yuille.

THE FUTURE OF COMPUTER VISION

As he has expanded the frontier of what’s possible in computer vision over the decades, Yuille has also educated hundreds of students, many of whom have gone on to build upon their former teacher’s results.

“Alan is a great mentor,” says computer vision researcher Cihang Xie, who took one of Yuille's machine learning courses while earning his master's degree at UCLA and later joined Yuille's lab at Johns Hopkins, where he received his PhD in 2020. He is now an assistant professor of computer science and engineering at the University of California, Santa Cruz.

“Alan is quite open to new directions and he respects your ideas,” Xie adds. “He is always very patient in completely understanding what you’re trying to do and help you in building a more mature research case, even when it’s something he also needs to learn.”

Xie worked on compositional models with Yuille, among other topics, and has seen how combining them with deep nets is advancing the technology. “Alan started these deep compositional models that are more powerful and pretty robust to [object] occlusion or other harder scenarios that cannot be solved by regular deep learning models,” says Xie.

The advances keep coming. In work presented at an international conference in July 2024, for instance, Xie, Yuille, and their students demonstrated an enhanced version of image-GPT, a visual counterpart to the GPT language models behind the celebrated chatbot ChatGPT. Just as those large language models predict the next words in a sentence to form (usually) coherent statements, image-GPT predicts the next pixels in an image, which lets it generate pictures of people, animals, cars, buildings, and so on. By making the model's predictions more semantic and context-aware, the researchers achieved 90% classification accuracy on a benchmark dataset, the best performance shown in academia and on par with results from big tech companies such as Google and Meta. “We constructed a state-of-the-art image recognition model, and we’re pretty excited about it,” says Xie.

“THE HUMAN ELEMENT”

Amid the dramatic rise of computer vision, Yuille emphasizes that for the field to evolve further, it must capture how humans learn to see by living in and interacting with their environments. “Human vision is developed by interacting with the world as infants; I see it with my son,” Yuille explains. Rather than just looking at tons of images, “you explore, you touch objects with your hand, you build up a knowledge model. I think if we want to take computer vision algorithms up to the next level,” Yuille concludes, “we’ll need to get that human element back in.”