Multiview Neural Surface Reconstruction by Disentangling Geometry and Appearance

Authors: Lior Yariv, Yoni Kasten, Dror Moran, Meirav Galun, Matan Atzmon, Ronen Basri, Yaron Lipman

Affiliations: Weizmann Institute of Science

NeurIPS 2020

Links: arXiv, Project Page, Code

Summary

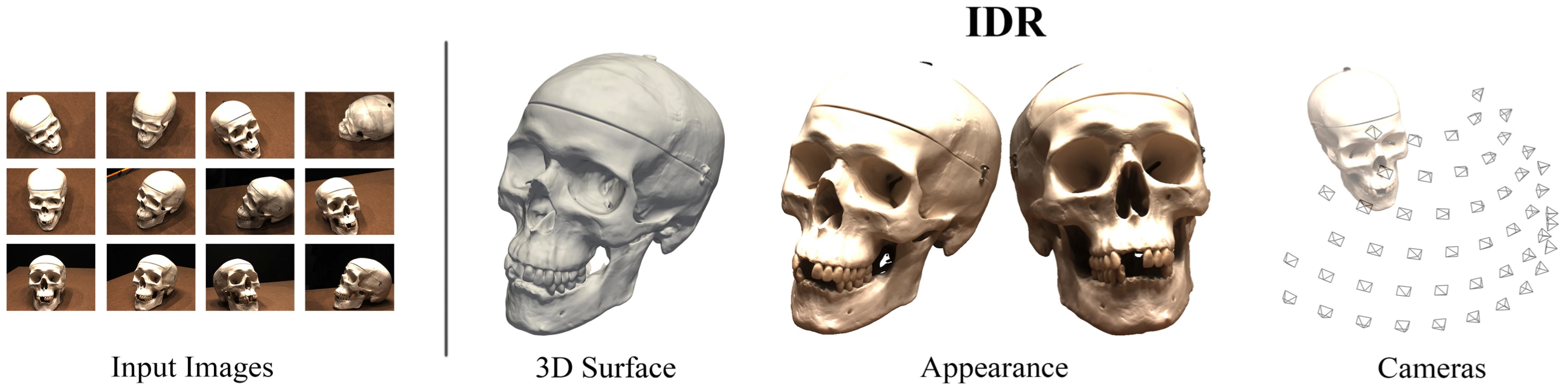

In this work, the authors introduce a neural network architecture that simultaneously learns the unknown geometry, the camera parameters, and a neural renderer that approximates the light reflected from the surface towards the camera. Trained on real-world 2D images from the DTU MVS dataset, which cover objects with different material properties and lighting conditions and come with noisy camera initializations, the model produces state-of-the-art 3D surface reconstructions with high fidelity, resolution, and detail.

Key Ideas

The goal is to reconstruct the geometry of an object from masked 2D images with possibly rough or noisy camera information. There are three unknowns:

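- geometry, represented by parameters $\theta \in \mathbb{R}^m$
- appearance, represented by parameters $\gamma \in \mathbb{R}^n$
- cameras, represented by parameters $\tau \in \mathbb{R}^k$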

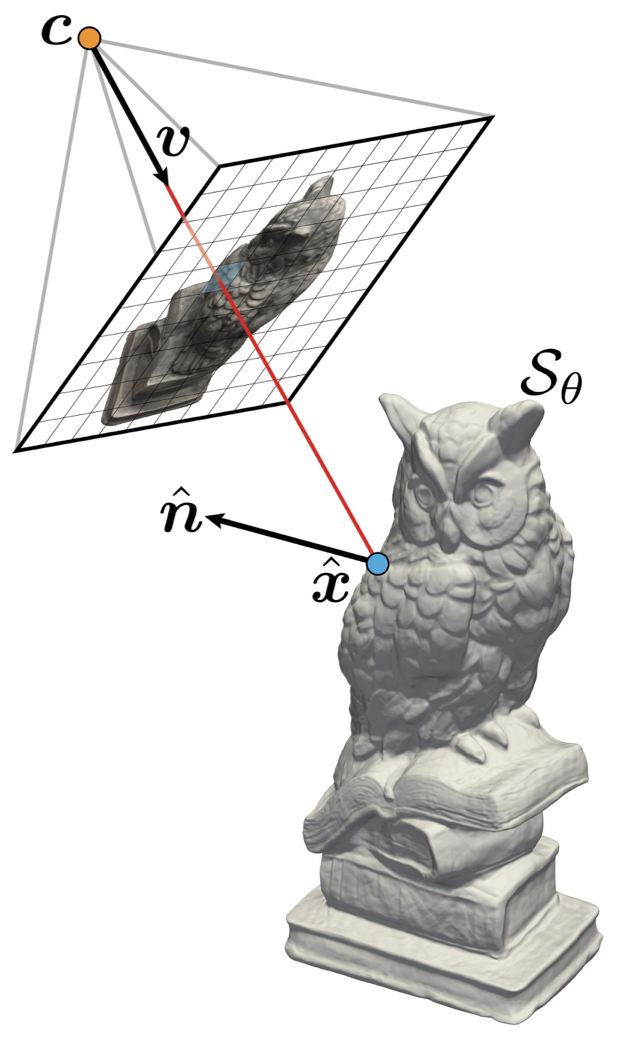

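The geometry is represented as the zero level set of an MLP $f$,

$$\mathcal{S}_\theta = \{ x \in \mathbb{R}^3 \mid f(x; \theta) = 0 \},$$

with learnable parameters $\theta \in \mathbb{R}^m$. The network $f$ is encouraged to model a signed distance function to its zero level set using implicit geometric regularization [1].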
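To make the level-set representation concrete, the following minimal sketch swaps the learned MLP for an analytic signed distance function of a unit sphere. The names `sphere_sdf` and `classify` are hypothetical and not taken from the paper or its code; the sign convention (negative inside, positive outside) is an assumption of this example.

```python
import math

def sphere_sdf(x, radius=1.0):
    """Stand-in for the MLP f: signed distance to a sphere centred at the origin.

    Negative inside the sphere, zero on it, positive outside (assumed convention).
    """
    return math.sqrt(sum(c * c for c in x)) - radius

def classify(x, f=sphere_sdf, tol=1e-9):
    """Report where x lies relative to the zero level set {x : f(x) = 0}."""
    value = f(x)
    if abs(value) <= tol:
        return "on surface"
    return "inside" if value < 0 else "outside"

points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
labels = [classify(p) for p in points]
```

With a trained network in place of `sphere_sdf`, the same test would describe the reconstructed surface rather than a sphere.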

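IDR forward model. Let the pixel be $p$, associated with some input image, and let $R_p(\tau) = \{ c_p + t v_p \mid t \ge 0 \}$ denote the ray through $p$, where $c_p = c_p(\tau)$ is the unknown center of the respective camera and $v_p = v_p(\tau)$ is the ray direction; $\tau$ denotes the camera parameters. Let $\hat{x}_p = \hat{x}_p(\theta, \tau)$ denote the first intersection of $R_p$ with the surface defined by the zero level set of $f(\cdot\,; \theta)$. The rendered color of the pixel is

$$L_p(\theta, \gamma, \tau) = M(\hat{x}_p, \hat{n}_p, \hat{z}_p, v_p; \gamma),$$

where $\hat{n}_p = \hat{n}_p(\theta)$ is the surface normal at $\hat{x}_p$, $\hat{z}_p = \hat{z}_p(\hat{x}_p; \theta)$ is a global geometry feature vector, and $M$ is a second MLP, the neural renderer, with parameters $\gamma$.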
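Finding the first intersection of a camera ray $c + t v$, $t \ge 0$, with a surface given as the zero level set of a signed distance function is commonly done with sphere tracing. The sketch below illustrates that idea under several assumptions: an analytic sphere replaces the learned network, the direction `v` has unit length, and the ray starts outside the object. The function names and default tolerances are hypothetical.

```python
import math

def sphere_sdf(x, radius=1.0):
    """Stand-in for the learned f: signed distance to a sphere at the origin."""
    return math.sqrt(sum(c * c for c in x)) - radius

def sphere_trace(c, v, f=sphere_sdf, max_steps=100, eps=1e-6, t_max=10.0):
    """Return (t, point) for the first hit of the ray c + t*v with {f = 0}, or None.

    Each step advances by the current distance value, which cannot overshoot
    the surface when f is a true signed distance function and |v| = 1.
    """
    t = 0.0
    for _ in range(max_steps):
        x = tuple(ci + t * vi for ci, vi in zip(c, v))
        d = f(x)
        if d < eps:          # close enough to the surface: report a hit
            return t, x
        t += d               # safe step along the ray
        if t > t_max:        # left the region of interest: report a miss
            return None
    return None

hit = sphere_trace((0.0, 0.0, -3.0), (0.0, 0.0, 1.0))   # ray through the centre
miss = sphere_trace((0.0, 2.0, -3.0), (0.0, 0.0, 1.0))  # ray passing above the sphere
```

The hit point, together with the normal obtained from the gradient of the distance function there, is what a renderer network would take as input.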

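Approximation of the surface light field. The surface light field radiance $L$ is the amount of light reflected from the surface at the intersection point $\hat{x}_p$ in direction $-v_p$, reaching the camera center $c_p$. It is determined by two functions: the bidirectional reflectance distribution function (BRDF), which describes the reflectance and color properties of the surface, and the light emitted in the scene.

The BRDF function $B(x, n, w^o, w^i)$ describes the proportion of reflected radiance at some wavelength leaving the surface point $x$ with normal $n$ in direction $w^o$, relative to the incoming radiance from direction $w^i$.

The light sources are described by a function $L^e(x, w^o)$ measuring the emitted radiance at some wavelength at point $x$ in direction $w^o$.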

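The overall rendering equation is given by

$$L(\hat{x}, w^o) = L^e(\hat{x}, w^o) + \int_{\Omega} B(\hat{x}, \hat{n}, w^o, w^i)\, L^i(\hat{x}, w^i)\, (\hat{n} \cdot w^i)\, dw^i = M_0(\hat{x}, \hat{n}, v),$$

where $B$ is the BRDF, $L^e$ is the emitted radiance, $L^i(\hat{x}, w^i)$ encodes the incoming radiance at $\hat{x}$ from direction $w^i$, $\Omega = \{ w^i : w^i \cdot \hat{n} > 0 \}$ is the half sphere around the normal $\hat{n}$, and $w^o = -v$ is the viewing direction. The light field is therefore a function $M_0$ of the surface point, its normal, and the viewing direction, which is what the neural renderer approximates.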
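As a sanity check on a reflection integral of the form $\int_\Omega B \, L^i \, (\hat{n} \cdot w^i)\, dw^i$ over the hemisphere around the normal, the sketch below evaluates it numerically for one simple special case. All modelling choices here are assumptions for illustration and are not from the paper: a Lambertian BRDF $B = \text{albedo}/\pi$, constant incoming radiance, and no emission. In that case the integral has the closed form $\text{albedo} \cdot L^i$.

```python
import math

def reflected_radiance(albedo, incoming, n_theta=200, n_phi=200):
    """Midpoint-rule estimate of the hemisphere integral of B * L_i * (n . w_i).

    theta is the angle between the incoming direction and the normal,
    phi is the azimuth; the solid-angle element is sin(theta) dtheta dphi.
    """
    brdf = albedo / math.pi                 # Lambertian: constant in all directions
    d_theta = (math.pi / 2) / n_theta
    d_phi = (2 * math.pi) / n_phi
    total = 0.0
    for i in range(n_theta):
        theta = (i + 0.5) * d_theta
        for _ in range(n_phi):              # integrand is azimuth-independent here
            cos_term = math.cos(theta)      # this is n . w_i
            total += brdf * incoming * cos_term * math.sin(theta) * d_theta * d_phi
    return total

radiance = reflected_radiance(albedo=0.5, incoming=2.0)
```

For real materials and lighting the integrand is far more complex, which is the motivation for letting a network approximate the resulting function of point, normal, and view direction.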

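Masked rendering. Consider the indicator function identifying whether a certain pixel is occupied by the rendered object,

$$S(\theta, \tau) = \begin{cases} 1 & \text{if the ray } R(\tau) \text{ intersects the zero level set of } f(\cdot\,; \theta), \\ 0 & \text{otherwise.} \end{cases}$$

Since this function is neither differentiable nor continuous in $\theta$ and $\tau$, it is replaced by the almost everywhere differentiable function

$$S_\alpha(\theta, \tau) = \mathrm{sigmoid}\Big( -\alpha \min_{t \ge 0} f(c + t v; \theta) \Big),$$

which approximates $S(\theta, \tau)$ as $\alpha \to \infty$, where $\alpha > 0$ is a parameter, $c$ and $v$ are the camera center and direction of the ray, and $f$ is assumed negative inside the object and positive outside.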
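A soft occupancy mask of the form $\mathrm{sigmoid}(-\alpha \min_{t \ge 0} f(c + t v))$ can be sketched as follows. This is an illustration under stated assumptions: the minimum over $t$ is approximated by uniform sampling on $[0, t_{\max}]$, an analytic sphere stands in for the learned network, and the names `soft_mask` and `stable_sigmoid` are hypothetical.

```python
import math

def sphere_sdf(x, radius=1.0):
    """Stand-in for the learned f: negative inside the sphere, positive outside."""
    return math.sqrt(sum(c * c for c in x)) - radius

def stable_sigmoid(z):
    """Sigmoid that avoids overflow in math.exp for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def soft_mask(c, v, alpha, f=sphere_sdf, t_max=6.0, n_samples=600):
    """Approximate sigmoid(-alpha * min_t f(c + t v)) by sampling t uniformly."""
    f_min = min(
        f(tuple(ci + (t_max * i / n_samples) * vi for ci, vi in zip(c, v)))
        for i in range(n_samples + 1)
    )
    return stable_sigmoid(-alpha * f_min)

direction = (0.0, 0.0, 1.0)
hit_mask = soft_mask((0.0, 0.0, -3.0), direction, alpha=50.0)    # ray through the object
graze_mask = soft_mask((0.0, 1.0, -3.0), direction, alpha=50.0)  # ray tangent to the object
miss_mask = soft_mask((0.0, 2.0, -3.0), direction, alpha=50.0)   # ray missing the object
```

Rays that pass through the object get values near 1, rays that miss get values near 0, and larger `alpha` sharpens the transition between the two.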

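Loss. The loss is given by

$$\mathrm{loss}(\theta, \gamma, \tau) = \mathrm{loss}_{\mathrm{RGB}}(\theta, \gamma, \tau) + \rho\, \mathrm{loss}_{\mathrm{MASK}}(\theta, \tau) + \lambda\, \mathrm{loss}_{E}(\theta),$$

where, for a minibatch of pixels $P$ with RGB values $I_p$ and object masks $O_p$, the set $P^{\mathrm{in}} \subset P$ contains the pixels with $O_p = 1$ whose ray intersects the surface, and $P^{\mathrm{out}} = P \setminus P^{\mathrm{in}}$. The three terms are

$$\mathrm{loss}_{\mathrm{RGB}} = \frac{1}{|P|} \sum_{p \in P^{\mathrm{in}}} \big| I_p - L_p(\theta, \gamma, \tau) \big|, \qquad \mathrm{loss}_{\mathrm{MASK}} = \frac{1}{\alpha |P|} \sum_{p \in P^{\mathrm{out}}} \mathrm{CE}\big( O_p, S_{p,\alpha}(\theta, \tau) \big), \qquad \mathrm{loss}_{E} = \mathbb{E}_x \big( \| \nabla_x f(x; \theta) \| - 1 \big)^2.$$

Here $|\cdot|$ is the $L_1$ norm, $L_p$ is the rendered color of pixel $p$, $\mathrm{CE}$ is the cross-entropy loss, $S_{p,\alpha}$ is the soft mask value of pixel $p$ with sharpness $\alpha$, and $\mathrm{loss}_E$ is the Eikonal regularization of [1], which encourages $f$ to be approximately a signed distance function.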
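The sketch below shows how an objective with an RGB term, a mask term, and an Eikonal regularizer could be assembled for a toy minibatch. It is an illustration only: the per-pixel dictionary layout, the function names, and the weights `rho` and `lam` are hypothetical, an analytic sphere replaces the learned network, and the gradient is taken by finite differences rather than automatic differentiation.

```python
import math

def sphere_sdf(x, radius=1.0):
    """Stand-in for the learned f; an exact SDF, so its gradient norm is 1."""
    return math.sqrt(sum(c * c for c in x)) - radius

def grad_norm(f, x, h=1e-5):
    """Norm of the gradient of f at x via central finite differences."""
    g = []
    for i in range(len(x)):
        hi = list(x); hi[i] += h
        lo = list(x); lo[i] -= h
        g.append((f(hi) - f(lo)) / (2 * h))
    return math.sqrt(sum(gi * gi for gi in g))

def cross_entropy(target, prob, tiny=1e-12):
    """Binary cross-entropy between a 0/1 target and a predicted probability."""
    prob = min(max(prob, tiny), 1.0 - tiny)
    return -(target * math.log(prob) + (1 - target) * math.log(1 - prob))

def total_loss(pixels, eikonal_points, f, alpha, rho, lam):
    """Return (total, rgb, mask, eikonal) for a minibatch of pixel records."""
    n = len(pixels)
    # RGB term: L1 colour error over pixels that are inside the mask and hit the surface.
    rgb = sum(
        sum(abs(a - b) for a, b in zip(p["target_rgb"], p["rendered_rgb"]))
        for p in pixels if p["in_set"]
    ) / n
    # Mask term: cross-entropy on the remaining pixels, scaled by 1/alpha.
    mask = sum(
        cross_entropy(p["target_mask"], p["soft_mask"])
        for p in pixels if not p["in_set"]
    ) / (alpha * n)
    # Eikonal term: mean squared deviation of the gradient norm from 1.
    eik = sum((grad_norm(f, x) - 1.0) ** 2 for x in eikonal_points) / len(eikonal_points)
    return rgb + rho * mask + lam * eik, rgb, mask, eik

pixels = [
    {"in_set": True, "target_rgb": (1.0, 0.0, 0.0), "rendered_rgb": (0.9, 0.1, 0.0)},
    {"in_set": False, "target_mask": 0, "soft_mask": 0.5},
]
samples = [(0.5, 0.2, -0.3), (1.5, 0.0, 0.7)]
total, rgb, mask, eik = total_loss(pixels, samples, sphere_sdf, alpha=50.0, rho=100.0, lam=0.1)
```

Because the sphere function is already an exact signed distance function, the Eikonal term is essentially zero here; for a network in training it penalizes deviations of the gradient norm from 1.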

Technical Details

Notes

References

[1] A. Gropp, L. Yariv, N. Haim, M. Atzmon, Y. Lipman. Implicit geometric regularization for learning shape. In arXiv, 2020.