Inflection MTM 2014

In brief. The traditional formulations of the main problems in machine translation, from alignment through model extraction and decoding to evaluation, almost entirely ignore the linguistic phenomenon of morphology, instead treating all words as distinct atoms. This misses a number of generalizations. For example, in alignment it could be useful to accumulate evidence across the various inflections of a verb such as walk, since walk, walked, walks, and walking all likely have related and overlapping translations. Beyond making the data artificially sparser, failing to reason properly about the morphological properties of the target language also creates problems for the language model, which may never have seen the correct inflected form even though it has seen other variants of the word. In this lab, we will consider morphology in a very narrow setting: given a sequence of Czech lemmas (base word forms) in the correct order, and a set of possible inflections for each, can you predict the correct inflected sequence?

Further background. Morphology is broadly placed into two categories: inflectional morphology studies how words change to reflect grammatical properties, roles, and other information, while derivational morphology describes how words change as they are adapted to different parts of speech. Of these, inflectional morphology is the more important modeling omission in natural language generation tasks like machine translation, because choosing the right form of a word is necessary to produce grammatical output.

The inflectional morphology of English is simple. It is mostly limited to verbs and pronouns, which reflect only a subset of person, number, tense, and one of two cases. Because of this, it is possible to do a good job translating into English without bothering with morphology (an auspicious fact for the development of the field).

However, this is not the case for many of the world’s languages. Languages such as Russian, Turkish, and Finnish have complex case systems that can produce hundreds of surface variations of a single lemma. The vast number of potential word forms creates data sparsity, an issue that is exacerbated by the fact that morphologically complex languages are often the ones without much in the way of parallel data.

In this assignment, you will gain an appreciation for the difficulties posed by morphology. The setting is simple: you are presented with a sequence of Czech lemmas, and your task is to choose the correct inflected form for each of them. The following tables give two examples. The first column is the sequence of lemmas, the second column is the set of possible inflections for each lemma (preceded by the number of inflections in parentheses), and the third column provides an English gloss.

| lemma | inflections | gloss |
|---|---|---|
| přednost | (3) přednost, předností, přednosti | advantages |
| manažerský | (6) manažerské, manažerských, manažerský, manažerským, manažerském, manažerského | of the management |
| kontrakt | (5) kontrakt, kontraktů, kontraktu, kontrakty, kontraktech | contract |

| lemma | inflections | gloss |
|---|---|---|
| teprve | (1) teprve | only, finally |
| takhle | (1) takhle | like this, this way |
| být | (43) je, by, jsou, bude, byl, být, není, jsme, bylo, byla, jsem, budou, byly, byli, nejsou, nebude, jste, bychom, bych, nebyl, nebylo, budeme, nebyla, nebudou, nebyly, byste, budu, nejsem, nejsme, jest, nebyli, budete, nebudeme, nebudu, budiž, nebýt, nejste, buďte, nebudete, býti, budeš, jsi, nebudeš | we are |
| světový | (12) světové, světových, světového, světový, světová, světovém, světovou, světovým, světovému, světovými, světoví, nejsvětovějšího | comparable to other countries |

To support you in this task, you are provided with a parallel training corpus containing sentence pairs in both lemmatized and inflected forms, and a default solution that chooses the most probable form for each lemma.

Getting Started

Start by cloning the assignment repo:

```shell
git clone https://github.com/mjpost/inflect
```

This contains all the code for the assignment, which you will build on.

Change to the inflect directory that was just created, and download the data for the assignment:

```shell
cd inflect
wget -q http://cs.jhu.edu/~post/files/mtm2014-inflect.tgz
tar xzf mtm2014-inflect.tgz
```

You will then find three sets of parallel files under data/, with the following prefixes:

- train: training data (for building models),

- dtest: development test data (for testing your model), and

- etest: held-out test data (for submitting to the leaderboard).

Sentences are parallel at the line level, and the words on each line also correspond exactly across files. The parallel files have the following suffixes, which denote the type of information they contain:

- `*.lemma` contains the lemmatized version of the data. Each lemma can be inflected to one or more fully inflected forms (which may or may not share the same surface form).

- `*.tag` contains a two-character sequence denoting each word’s part of speech. This file is word-for-word parallel with the lemmas.

- `*.tree` contains dependency trees, which organize the words into a tree with words generating their arguments. The tree format is described below, and code is provided to read it.

- `*.form` contains the fully inflected forms. Note that we provide dtest.form to you so that you can test your ideas, but of course you should not look at it or build models over it. etest.form is kept hidden.
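Since the files are parallel at both the line and the word level, a natural first step is to read a lemma file and its matching form file together. The Python sketch below is one way to do that. The function name `read_parallel` is hypothetical (it is not part of the provided scripts), and it assumes the files are whitespace-tokenized UTF-8 text.

```python
from itertools import zip_longest
from typing import Iterable, List, Tuple


def read_parallel(lemma_lines: Iterable[str],
                  form_lines: Iterable[str]) -> List[List[Tuple[str, str]]]:
    """Pair each lemma with its inflected form, sentence by sentence.

    Assumes whitespace-tokenized input that is parallel at the line and word level.
    """
    corpus = []
    for lineno, (lemma_line, form_line) in enumerate(
            zip_longest(lemma_lines, form_lines), start=1):
        # zip_longest pads the shorter file with None, so a length mismatch is caught.
        if lemma_line is None or form_line is None:
            raise ValueError(f"files have different numbers of lines (at line {lineno})")
        lemmas, forms = lemma_line.split(), form_line.split()
        if len(lemmas) != len(forms):
            raise ValueError(f"line {lineno}: {len(lemmas)} lemmas but {len(forms)} forms")
        corpus.append(list(zip(lemmas, forms)))
    return corpus
```

For example, passing `open("data/train.lemma", encoding="utf-8")` and `open("data/train.form", encoding="utf-8")` would yield one list of (lemma, form) pairs per training sentence.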

You should use the development data (dtest) to test your approaches (make sure you don’t use the answers except in the grader). When you have something that works, run it on the test data (etest.lemma) and submit that output to the leaderboard. The scripts/ subdirectory contains a number of scripts, including a grader and a default implementation that simply chooses the most likely inflection for each word:

```shell
# Baseline: no inflection
cat data/dtest.lemma | ./scripts/grade

# Choose the most likely inflection
cat data/dtest.lemma | ./scripts/inflect | ./scripts/grade
```

The scripts/inflect script uses the training data (whose location is hard-coded) to count how often each form appears with each lemma. It then inflects each lemma independently, without reference to neighboring lemmas or inflections.
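The baseline behavior just described (count forms per lemma, then pick the most frequent one independently for each word) can be sketched in a few lines of Python. This is an illustrative re-creation, not the code in scripts/inflect: the names `train_unigram` and `inflect_sentence` are hypothetical, and copying an unseen lemma through unchanged is an assumed fallback.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def train_unigram(pairs: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Map each lemma to the form it was most often inflected as in training."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for lemma, form in pairs:
        counts[lemma][form] += 1
    # Counter.most_common breaks ties in first-seen order.
    return {lemma: forms.most_common(1)[0][0] for lemma, forms in counts.items()}


def inflect_sentence(lemmas: List[str], best_form: Dict[str, str]) -> List[str]:
    """Inflect each lemma independently; unseen lemmas are copied through unchanged."""
    return [best_form.get(lemma, lemma) for lemma in lemmas]
```

Because each word is handled in isolation, this model cannot exploit agreement between neighboring words, which is the main limitation to improve on.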

The evaluation metric is accuracy: the percentage of words for which you chose the correct inflection.
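For quick experiments, it can help to compute the same kind of score inside your own code. The sketch below computes token-level, case-sensitive exact-match accuracy over aligned sentences. The name `accuracy` is hypothetical, and it is an assumption that scripts/grade scores every token (including punctuation) in exactly this way, so treat the provided grader as authoritative.

```python
from typing import Sequence


def accuracy(predicted: Sequence[Sequence[str]], gold: Sequence[Sequence[str]]) -> float:
    """Fraction of tokens whose predicted form exactly matches the gold form."""
    if len(predicted) != len(gold):
        raise ValueError("different numbers of sentences")
    correct = total = 0
    for pred_sentence, gold_sentence in zip(predicted, gold):
        if len(pred_sentence) != len(gold_sentence):
            raise ValueError("sentence lengths differ")
        correct += sum(p == g for p, g in zip(pred_sentence, gold_sentence))
        total += len(gold_sentence)
    return correct / total if total else 0.0
```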

The Challenge

Your challenge is to improve the accuracy of the inflector as much as possible. The provided implementation simply chooses the most frequent inflection computed from the lemma alone (with statistics gathered from the training data).

There are plenty of ways to improve the default unigram model. You can think of the problem as a translation problem, for example (but without reordering). Another way to think about it is as an HMM, where the hidden states are the inflected forms (drawn from each lemma’s set of possible inflections) and the observations are the lemmas. We have also provided much more information that should permit subtler approaches. Here are some suggestions:

- Consider altering the forms of the lemmas: some of them have extra information listed after the `^` character, e.g., `rychle_^(*1ý)`.

- Condition the inflection on one or more previous inflections (HMM).

- Incorporate part-of-speech tags (see section below).

- Implement a bigram language model over inflected forms.

- Implement a longer n-gram model and a custom backoff structure that considers shorter contexts, POS tags, the lemma, etc.

- Model long-distance agreement by incorporating the labeled dependency structure. For example, you could build a bigram language model that decomposes over the dependency tree, instead of the immediate n-gram history.

- Implement multiple approaches and take a vote on each word.
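As one concrete instance of the HMM and bigram suggestions, the Python sketch below treats inflected forms as hidden states and lemmas as observations, and decodes each sentence with the Viterbi algorithm. It is a minimal sketch under several assumptions: the class name `BigramInflector`, the `<s>` start symbol, and the smoothing constant `alpha` are hypothetical. It uses simple add-alpha smoothing rather than a proper backoff scheme, takes each lemma’s candidate set to be the forms it was seen with in training, and copies unseen lemmas through unchanged. It expects a training corpus given as a list of sentences, each a list of (lemma, form) pairs.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

BOS = "<s>"  # hypothetical sentence-start symbol


class BigramInflector:
    """HMM-style inflector: hidden states are forms, observations are lemmas.

    transition: add-alpha smoothed bigram P(form | previous form)
    emission:   relative-frequency estimate of P(lemma | form)
    """

    def __init__(self, corpus: Sequence[Sequence[Tuple[str, str]]], alpha: float = 0.1):
        self.alpha = alpha
        self.candidates: Dict[str, Counter] = defaultdict(Counter)   # lemma -> form counts
        self.form_lemmas: Dict[str, Counter] = defaultdict(Counter)  # form -> lemma counts
        self.bigrams: Dict[str, Counter] = defaultdict(Counter)      # previous form -> form counts
        for sentence in corpus:
            prev = BOS
            for lemma, form in sentence:
                self.candidates[lemma][form] += 1
                self.form_lemmas[form][lemma] += 1
                self.bigrams[prev][form] += 1
                prev = form
        self.bigram_totals = {p: sum(c.values()) for p, c in self.bigrams.items()}
        self.form_totals = {f: sum(c.values()) for f, c in self.form_lemmas.items()}
        self.vocab_size = len(self.form_lemmas) + 1  # known forms plus one slot for unseen ones

    def log_transition(self, prev: str, form: str) -> float:
        count = self.bigrams[prev][form] if prev in self.bigrams else 0
        total = self.bigram_totals.get(prev, 0)
        return math.log((count + self.alpha) / (total + self.alpha * self.vocab_size))

    def log_emission(self, lemma: str, form: str) -> float:
        if lemma not in self.candidates:
            return 0.0  # unseen lemma: copied through as its own form, scored as certain
        return math.log(self.form_lemmas[form][lemma] / self.form_totals[form])

    def inflect(self, lemmas: List[str]) -> List[str]:
        """Viterbi search for the best form sequence given the lemma sequence."""
        if not lemmas:
            return []
        prev_scores: Dict[str, float] = {BOS: 0.0}
        backpointers: List[Dict[str, str]] = []
        for lemma in lemmas:
            # States at this position: forms seen with the lemma, else the lemma itself.
            forms = list(self.candidates[lemma]) if lemma in self.candidates else [lemma]
            scores: Dict[str, float] = {}
            pointers: Dict[str, str] = {}
            for form in forms:
                best_prev, best_score = max(
                    ((p, s + self.log_transition(p, form)) for p, s in prev_scores.items()),
                    key=lambda item: item[1],
                )
                scores[form] = best_score + self.log_emission(lemma, form)
                pointers[form] = best_prev
            backpointers.append(pointers)
            prev_scores = scores
        # Trace back from the highest-scoring final state.
        form = max(prev_scores, key=prev_scores.get)
        output = [form]
        for pointers in reversed(backpointers[1:]):
            form = pointers[form]
            output.append(form)
        return output[::-1]
```

Since most forms belong to a single lemma, the emission term is usually log 1 = 0, so in practice this is a bigram language model over forms whose search space is restricted to each lemma’s candidates. A better-smoothed or longer-context model, or one conditioned on POS tags, could replace `log_transition` without changing the decoder.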

Obviously, you should feel free to pursue other ideas. Morphology for machine translation is an understudied problem, so it’s possible you could come up with an idea that people have not tried before!

Submitting to the Leaderboard

Please follow these steps to submit your output on both the dev and test sets (dtest.form and etest.form) to the leaderboard, which will let you see your score and how others are doing. During the lab, the development set scores will be shown; when the lab is done, the test set results will be displayed.

- Visit the course submission page. Enter any identifier you wish to get in. Please don’t log in as an administrator :).

- To add yourself to the leaderboard, check the box, and choose a handle or name to be identified by.

- Your submission should include both the development and test data. You can cat both files and pipe them through your script (make sure dtest is first). For example: `cat data/{dtest,etest}.lemma | ./scripts/inflect > submission.txt`

- Then use the file submission dialog to upload your submission.

Click here to see the current leaderboard.

Using POS tags and dependency trees

The .tag and .tree files contain parts of speech and dependency trees for each sentence. Information about the part-of-speech tags can be found here.

Dependency trees are represented as follows. The tokens on each line correspond to the words they share an index with, and contain two pieces of information, depicted as PARENT/LABEL. PARENT is the index of the word’s parent word, and LABEL is the label of the edge implicit between those indices. Parent index 0 represents the root of the tree. Each child selects its parent, but the edge direction is from parent to child.

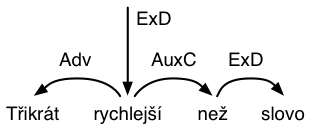

For example, consider the following lines, from the lemma, POS, tree, and word files (plus an English gloss), respectively:

```
třikrát`3 rychlý než-2 slovo
Cv AA J, NN
2/Adv 0/ExD 2/AuxC 3/ExD
Třikrát rychlejší než slovo
Three-times faster than-the-word
```

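Line 3 here encodes the following dependency tree: rychlý (word 2) attaches to the root (index 0) with label ExD; třikrát (word 1, Adv) and než (word 3, AuxC) are children of rychlý; and slovo (word 4, ExD) is a child of než.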
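The lemma line in this example shows the kind of decoration mentioned in the suggestion about altering lemma forms: ``třikrát`3`` and `než-2` carry suffixes beyond the base word, as does `rychle_^(*1ý)`. The Python sketch below splits such lemmas into parts so you can experiment with keeping or discarding each one. The assumed layout (base, optional `-N` number, optional backtick reference, optional `_` technical suffix) follows the Prague Dependency Treebank lemma conventions as we understand them; verify it against the data before relying on it. The names `split_lemma`, `LemmaParts`, and `LEMMA_RE` are hypothetical.

```python
import re
from typing import NamedTuple, Optional

# Assumed layout of a decorated lemma (an assumption; check it against the data):
#   base, then optional "-N" number, optional "`..." reference, optional "_..." suffix.
# The lazy base group keeps the base as short as possible while the rest still matches.
LEMMA_RE = re.compile(r"(?P<base>.+?)(?P<sense>-\d+)?(?P<ref>`[^_]*)?(?P<tech>_.*)?")


class LemmaParts(NamedTuple):
    base: str
    sense: Optional[str]
    ref: Optional[str]
    tech: Optional[str]


def split_lemma(lemma: str) -> LemmaParts:
    """Split a decorated lemma such as 'než-2' into its assumed parts."""
    match = LEMMA_RE.fullmatch(lemma)
    if match is None:  # only the empty string fails to match
        return LemmaParts(lemma, None, None, None)
    return LemmaParts(*match.group("base", "sense", "ref", "tech"))
```

For instance, mapping every lemma to `split_lemma(lemma).base` merges entries that differ only in their decorations. This may reduce sparsity, at the cost of conflating lemmas that the decorations were distinguishing.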

To avoid duplicated work, a class is provided that reads the dependency structure for you, providing direct access to each word’s head and children (if any), along with the labels of these edges. Example usage can be found in scripts/inflect-tree. For a list of analytical functions (the edge labels), see this document.
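The provided class in scripts/inflect-tree is the intended way to read trees. Purely to illustrate the PARENT/LABEL format, here is a standalone Python sketch that parses one line of a .tree file and extracts (head, child, label) triples, the kind of pairs a bigram model decomposed over the dependency tree would condition on. The names `Dependency`, `parse_tree_line`, `children`, `head_child_pairs`, and the `<root>` placeholder are hypothetical.

```python
from typing import Dict, List, NamedTuple, Tuple


class Dependency(NamedTuple):
    head: int   # 1-based index of the parent word; 0 means the root
    label: str  # analytical function on the edge, e.g. "Adv"


def parse_tree_line(line: str) -> List[Dependency]:
    """Parse one line of a .tree file: one PARENT/LABEL token per word."""
    deps = []
    for token in line.split():
        head, label = token.split("/", 1)
        deps.append(Dependency(int(head), label))
    return deps


def children(deps: List[Dependency]) -> Dict[int, List[int]]:
    """Map each index (0 = root) to the 1-based indices of its dependents."""
    kids: Dict[int, List[int]] = {i: [] for i in range(len(deps) + 1)}
    for index, dep in enumerate(deps, start=1):
        kids[dep.head].append(index)
    return kids


def head_child_pairs(words: List[str], deps: List[Dependency]) -> List[Tuple[str, str, str]]:
    """(head word, child word, label) for every word; the root's head is '<root>'."""
    return [("<root>" if dep.head == 0 else words[dep.head - 1], words[i], dep.label)
            for i, dep in enumerate(deps)]
```

Applied to the example above, the pair for slovo would be (než-2, slovo, ExD), linking a word to its governor even when the two are not adjacent.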

Credits: This assignment was designed by Matt Post for a spring 2014 course in machine translation taught at Johns Hopkins University. The data used in the assignment comes from the Prague Dependency Treebank v2.0.