How I got into Medical Robotics Research

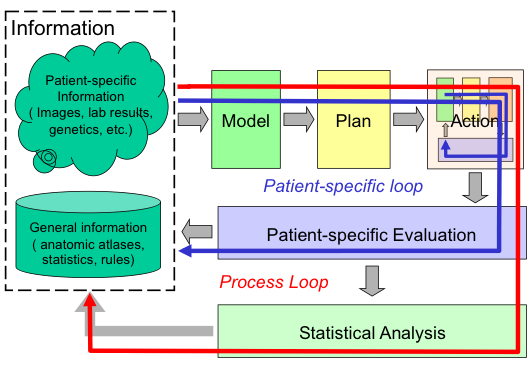

Interventional medicine as a closed-loop process: This basic process of 1) combining specific information about the patient with the physician’s general knowledge to determine the patient’s condition; 2) formulating a plan of action; 3) carrying out this plan; and 4) evaluating the results has existed since ancient times. Traditionally, all these steps have taken place in the physician’s head. The ability of modern computer-based technology to assist humans in processing and acting on complex information will profoundly enhance this process in the 21st century.

After joining IBM as a Research Staff Member in 1976, I held various research and management positions. By the late 1980s I was a middle manager within the Manufacturing Research Department in Yorktown Heights. We had an active collaboration with two surgeons from the University of California at Davis to explore the feasibility of developing a robotic system to assist in cementless hip surgery. One surgeon (Howard Paul) was a veterinarian and the other (William Bargar) treated humans. I became so interested in the project that I took an internal sabbatical in 1989 to lead a small team developing a prototype system (later called "Robodoc") that could perform surgery on Dr. Paul's veterinary patients. During the same period, I began working with Dr. Court Cutting of NYU Medical Center to help develop an integrated surgical planning and navigation system for craniofacial osteotomies. Following this sabbatical year, I established and led the Computer-Assisted Surgery (CAS) Group within the Computer Science Department, where research activities included continued development of Robodoc and navigation systems, development of the LARS system for minimally-invasive surgery, and other work relating to our vision of computer-integrated interventional medicine. I continued to lead the Computer-Assisted Surgery Group until moving to Johns Hopkins in 1995.

A good overview of the activities of the Computer-Assisted Surgery Group may be found in:

- R. H. Taylor, J. Funda, L. Joskowicz, A. Kalvin, S. Gomory, A. Gueziec, and L. Brown, "An Overview of Computer-Integrated Surgery Research at the IBM T. J. Watson Research Center", IBM Journal of Research and Development, March, 1996.

After moving to Johns Hopkins in 1995, I continued to collaborate with IBM and the startup company created to commercialize Robodoc.

Further information about some of these activities, together with some citations to relevant papers, is in the sections below. Also in this period, I co-edited a book and co-organized an NSF workshop on this emerging area:

- R. H. Taylor, S. Lavallee, G. C. Burdea, and R. Mosges (eds.), Computer-Integrated Surgery: Technology and Clinical Applications, The MIT Press, 1995.

- R. H. Taylor, G. B. Bekey, and J. Funda, Proc. of NSF Workshop on Computer Assisted Surgery, Washington, D.C., 1993.

Overall, this IBM work and subsequent JHU-IBM collaborations led to 13 journal articles and 29 refereed conference papers. Some selected examples are mentioned below.

Robotic Hip Surgery (Robodoc)

I was the principal architect for and led the implementation of a prototype robotic system (later called “Robodoc”) that was designed to improve the accuracy of bone preparation in hip and knee replacement surgery. Robodoc was the first robotic assistant to be used in a major surgical procedure involving significant tissue removal. Key innovations in this work included the use of CT images to plan the procedure, co-registration of the preoperative plan and robot to the patient in the operating room, and control of the robot to machine the desired shape accurately and safely. One novel aspect of the IBM prototype was the use of an optical tracking system to provide redundant tracking of the robot’s cutter, together with other redundant safety measures. Although now routine in medical robotics, each of these steps required significant innovation at the time.
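Robodoc's actual registration procedure is not described here, so the following is only a minimal sketch of the general idea behind co-registering a plan with the robot: given a few fiducial points whose coordinates are known both in the CT-based plan and in robot coordinates, find the rigid transform that best maps one set onto the other in the least-squares sense, then use it to carry planned cutter targets into robot space. To stay short and dependency-free it is reduced to 2D, where the optimal rotation has a closed form; real systems work in 3D (commonly with SVD- or quaternion-based solutions). The function names `register_2d`, `apply_transform`, and `fiducial_rms_error` and the example coordinates are hypothetical.

```python
import math

# Hypothetical 2D illustration of paired-point rigid registration
# (not the Robodoc implementation): robot ~= R(theta) * image + t.

def register_2d(image_pts, robot_pts):
    """Least-squares rotation + translation mapping image_pts onto robot_pts.

    Both arguments are equal-length lists of (x, y) in corresponding order.
    Returns (theta, tx, ty).
    """
    n = len(image_pts)
    if n != len(robot_pts) or n < 2:
        raise ValueError("need at least two corresponding point pairs")
    # Centroids of both point sets.
    ax = sum(p[0] for p in image_pts) / n
    ay = sum(p[1] for p in image_pts) / n
    bx = sum(p[0] for p in robot_pts) / n
    by = sum(p[1] for p in robot_pts) / n
    # Sum dot and cross products of the centered pairs; the optimal angle
    # maximizes sum(b_i . R a_i) = cos(theta)*s_dot + sin(theta)*s_cross.
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(image_pts, robot_pts):
        px, py, qx, qy = px - ax, py - ay, qx - bx, qy - by
        s_dot += px * qx + py * qy
        s_cross += px * qy - py * qx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated image centroid onto the robot centroid.
    return theta, bx - (c * ax - s * ay), by - (s * ax + c * ay)

def apply_transform(transform, pt):
    """Map a point from plan (image) coordinates into robot coordinates."""
    theta, tx, ty = transform
    c, s = math.cos(theta), math.sin(theta)
    return (c * pt[0] - s * pt[1] + tx, s * pt[0] + c * pt[1] + ty)

def fiducial_rms_error(transform, image_pts, robot_pts):
    """Root-mean-square residual at the fiducials after registration."""
    sq = 0.0
    for p, q in zip(image_pts, robot_pts):
        x, y = apply_transform(transform, p)
        sq += (x - q[0]) ** 2 + (y - q[1]) ** 2
    return math.sqrt(sq / len(image_pts))

# Example: fiducials in the plan, and the same fiducials as located by the robot.
plan_pts = [(0.0, 0.0), (10.0, 0.0), (0.0, 5.0)]
robot_pts = [(100.0, 50.0), (100.0, 60.0), (95.0, 50.0)]
T = register_2d(plan_pts, robot_pts)
target_robot = apply_transform(T, (2.0, 3.0))  # ≈ (97.0, 52.0)
```

The residual from `fiducial_rms_error` is the kind of number a system could check before allowing the robot to cut; with noise-free example points it is essentially zero.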

After the system was transferred to Integrated Surgical Systems (now Think Surgical) for clinical development, I led efforts at both IBM and Johns Hopkins to improve the system and to extend it to “revision” surgery, which replaces failing hip implants. These efforts included novel X-ray-based methods to register the robot accurately to the patient’s bones, novel cutting strategies for revision surgery, and novel planning methods for custom implants.

The IBM system was first used on veterinary patients (dogs needing hip replacements) in 1990, and the clinical version developed by Integrated Surgical Systems was first used on human patients in 1992. This system was deployed commercially in Europe starting in 1994 and currently has both FDA and CE-mark approval. Over 32,000 hip and knee procedures have been performed with the system. In addition, crucial features of the Robodoc system were adopted by other early orthopaedic robots, such as the Caspar system that was marketed in Europe to compete with Robodoc, and are found in many surgical robots used today in a variety of surgical applications.

Selected publications from the IBM phase of this work:

- R. H. Taylor, H. A. Paul, C. B. Cutting, B. Mittelstadt, W. Hanson, P. Kazanzides, B. Musits, Y.-Y. Kim, A. Kalvin, B. Haddad, D. Khoramabadi, and D. Larose, "Augmentation of Human Precision in Computer-Integrated Surgery", Innovation et Technologie en Biologie et Medicine, vol. 13, no. 4 (special issue on computer assisted surgery), pp. 450-459, 1992.

- R. H. Taylor, H. A. Paul, P. Kazanzides, B. D. Mittelstadt, W. Hanson, J. F. Zuhars, B. Williamson, B. L. Musits, E. Glassman, and W. L. Bargar, "An Image-directed Robotic System for Precise Orthopaedic Surgery", IEEE Transactions on Robotics and Automation, vol. 10, no. 3, pp. 261-275, 1994.

- L. Joskowicz and R. H. Taylor, "Interference-Free Insertion of a Solid Body into a Cavity: An Algorithm and a Medical Application", Int. Journal of Robotics Research, vol. 15, no. 3 (June), 1996.

Selected publications from follow-on collaborations between IBM and JHU:

- R. H. Taylor, L. Joskowicz, B. Williamson, A. Gueziec, A. Kalvin, P. Kazanzides, R. VanVorhis, J. H. Yao, R. Kumar, A. Bzostek, A. Sahay, M. Borner, and A. Lahmer, "Computer-Integrated Revision Total Hip Replacement Surgery: Concept and Preliminary Results", Medical Image Analysis, vol. 3, no. 3, pp. 301-319, 1999.

- J. Yao, R. H. Taylor, R. P. Goldberg, R. Kumar, A. Bzostek, R. V. Vorhis, P. Kazanzides, and A. Gueziec, "A C-Arm Fluoroscopy-Guided Progressive Cut Refinement Strategy Using a Surgical Robot", J. Computer Assisted Surgery, vol. 5, no. 6, pp. 373-390, 2000. PMID: 11295851

- A. Gueziec, P. Kazanzides, B. Williamson, and R. Taylor, "Anatomy-based Registration of CT-Scan and Intraoperative X-ray images for Guiding a Surgical Robot", IEEE Transactions on Medical Imaging, vol. 17, no. 5, pp. 715-728, 1998. PMID: 9874295

A Planning and Navigation System for Craniofacial Surgery

Concurrently with my work on Robodoc, I collaborated with Dr. Court Cutting (NYU Medical Center) to develop a system to assist surgeons in performing craniofacial surgery. This system included: 1) a planning system based on CT images and a statistical model of normal anatomy to determine how the bones of a patient’s face should be cut apart and relocated to give a more normal appearance; 2) an optical surgical “navigation” system to track the relative position of the bone fragments in the operating room; and 3) a passive manipulation aid and computer graphics to help the surgeon achieve the desired alignment. This was one of the first uses of surgical navigation for non-neurosurgical applications.
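The navigation software itself is not described here, so the following is only a hedged sketch of the pose bookkeeping that tracking bone fragments relative to one another requires. If a tracker reports the pose of a reference body (for instance, the stable part of the skull) and of a bone fragment, the fragment's pose relative to the reference is inv(T_ref) composed with T_frag, and comparing that with the planned relative pose gives the translation and rotation still to be corrected. Poses are plain (R, t) pairs; the names, the z-axis-only rotations, and the example numbers are all hypothetical.

```python
import math

# Hypothetical representation: a rigid pose is (R, t), R a 3x3 rotation matrix
# (list of rows) and t a 3-vector, mapping local coordinates into tracker coordinates.

def compose(a, b):
    """Pose a∘b: apply b first, then a."""
    ra, ta = a
    rb, tb = b
    r = [[sum(ra[i][k] * rb[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
    t = [sum(ra[i][k] * tb[k] for k in range(3)) + ta[i] for i in range(3)]
    return r, t

def invert(a):
    """Inverse of a rigid pose: (R^T, -R^T t)."""
    r, t = a
    rt = [[r[j][i] for j in range(3)] for i in range(3)]
    return rt, [-sum(rt[i][k] * t[k] for k in range(3)) for i in range(3)]

def rot_z(deg):
    """Rotation about the z axis by deg degrees."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def fragment_relative_pose(ref_in_tracker, frag_in_tracker):
    """Pose of the bone fragment expressed in the reference body's frame."""
    return compose(invert(ref_in_tracker), frag_in_tracker)

def alignment_error(measured_rel, planned_rel):
    """Remaining (translation, rotation in degrees) between measured and planned poses."""
    r, t = compose(invert(planned_rel), measured_rel)
    translation = math.sqrt(sum(x * x for x in t))
    # Rotation angle from the trace of the residual rotation, clamped for acos.
    cos_angle = max(-1.0, min(1.0, (r[0][0] + r[1][1] + r[2][2] - 1.0) / 2.0))
    return translation, math.degrees(math.acos(cos_angle))

# Example: simulated tracker readings for the skull reference and one fragment.
ref = (rot_z(30.0), [10.0, 0.0, 0.0])
frag = compose(ref, (rot_z(12.0), [5.0, 1.0, 0.0]))  # fragment's true pose relative to the skull
planned = (rot_z(10.0), [5.0, 0.0, 0.0])
err_mm, err_deg = alignment_error(fragment_relative_pose(ref, frag), planned)  # ≈ 1.0, 2.0
```

Because the fragment is measured relative to the reference body rather than to the tracker, the result does not change if the patient's head as a whole moves during the procedure.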

Selected publications from this work:

- R. H. Taylor, H. A. Paul, C. B. Cutting, B. Mittelstadt, W. Hanson, P. Kazanzides, B. Musits, Y.-Y. Kim, A. Kalvin, B. Haddad, D. Khoramabadi, and D. Larose, "Augmentation of Human Precision in Computer-Integrated Surgery", Innovation et Technologie en Biologie et Medicine, vol. 13, no. 4 (special issue on computer assisted surgery), pp. 450-459, 1992.

- R. H. Taylor, C. B. Cutting, Y. Kim, A. D. Kalvin, D. L. Larose, B. Haddad, D. Khoramabadi, M. Noz, R. Olyha, N. Bruun, and D. Grimm, "A Model-Based Optimal Planning and Execution System with Active Sensing and Passive Manipulation for Augmentation of Human Precision in Computer-Integrated Surgery", in Second Int. Symposium on Experimental Robotics, Toulouse, France, June 25-27, 1991. URL: https://link.springer.com/chapter/10.1007/BFb0036139

- C. Cutting, R. Taylor, D. Khorramabadi, and B. Haddad, "Optical Tracking of Bone Fragments During Craniofacial Surgery", in Proc. 2nd Int. Symp. on Medical Robotics and Computer Assisted Surgery, Baltimore, Md., Nov 4-7, 1995, pp. 221-225.

- C. B. Cutting, F. L. Bookstein, and R. H. Taylor, "Applications of Simulation, Morphometrics and Robotics in Craniofacial Surgery", in Computer-Integrated Surgery, R. H. Taylor, S. Lavallee, G. Burdea, and R. Mosges, Eds. Cambridge, Mass.: MIT Press, 1996, pp. 641-662.

- A. D. Kalvin and R. H. Taylor, "Superfaces: Polygonal Mesh Simplification with Bounded Error", IEEE Computer Graphics and Applications, vol. 16, no. 3 (May), pp. 64-77, 1996.

The LARS System for Minimally Invasive Surgery

While at IBM, I also developed a novel robotic system (called LARS) as a surgical assistant for minimally-invasive surgery, as part of a collaboration with Johns Hopkins University. Although its primary intended use was for manipulating surgical endoscopes, the system was designed to be precise enough to be used in image-guided manipulation of surgical tools.

The LARS system incorporated many novel elements that are now widely used in surgical robots. Among the most significant innovations was the introduction of a “remote center-of-motion” (RCM) structure, which provides a kinematically constrained pivoting motion at the point where the endoscope or tool enters the patient’s body. The RCM provides high dexterity about the entry point while also simplifying other aspects of the system design and its safety implementation.
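To make the pivoting constraint concrete, here is a hedged sketch of an idealized remote center of motion: the tool shaft can only rotate about a fixed pivot at the entry port (two angles) and slide along its own axis (insertion depth), so in this model the shaft passes through the pivot for every joint setting. It does not model the actual LARS linkage, roll about the shaft, or joint limits; the angle convention and the names `rcm_tip` and `rcm_joints` are assumptions.

```python
import math

# Idealized RCM model (assumed convention): the patient lies on the -z side of the pivot.

def _direction(yaw, pitch):
    """Unit vector of the tool shaft: pitch is the tilt from the downward (-z) axis, yaw its azimuth."""
    return (math.sin(pitch) * math.cos(yaw),
            math.sin(pitch) * math.sin(yaw),
            -math.cos(pitch))

def rcm_tip(pivot, yaw, pitch, depth):
    """Forward kinematics: the tip lies `depth` along the shaft from the fixed pivot."""
    u = _direction(yaw, pitch)
    return tuple(p + depth * ui for p, ui in zip(pivot, u))

def rcm_joints(pivot, target):
    """Inverse kinematics: the (yaw, pitch, depth) that place the tip at `target`."""
    d = [t - p for t, p in zip(target, pivot)]
    depth = math.sqrt(sum(x * x for x in d))
    if depth == 0.0:
        raise ValueError("target coincides with the pivot; shaft direction is undefined")
    pitch = math.acos(max(-1.0, min(1.0, -d[2] / depth)))
    yaw = math.atan2(d[1], d[0])
    return yaw, pitch, depth

# Example: entry port at the origin, target 130 mm away inside the patient.
port = (0.0, 0.0, 0.0)
yaw, pitch, depth = rcm_joints(port, (30.0, 40.0, -120.0))  # depth == 130.0
tip = rcm_tip(port, yaw, pitch, depth)                      # ≈ (30.0, 40.0, -120.0)
```

In this idealized model no combination of the three joint values can translate the shaft sideways at the entry point, which is the property that lets a controller reason about the port location once rather than enforcing it in software on every motion.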

The Remote-Center-of-Motion (RCM) mechanism introduced in the LARS system, together with variants, has become ubiquitous in medical robot systems, both in academia and in commercial applications, ranging from the DaVinci surgical robots (over 4200 installed worldwide) to smaller clinically applied systems (such as separate ophthalmology robots developed by KU Leuven and by Preceyes, Inc.), and to many research systems for minimally invasive surgery, image-guided injections, and microsurgery. As another indication of impact, a Google search on the phrase “RCM medical robots” yields over 3400 publications, and my US patent 5,397,323, “Remote center-of-motion robot for surgery” has been cited 491 times in patent and other literature.

The surgeon could control the system through a miniature joystick device adapted from an IBM ThinkPad, through voice commands, or by hand-over-hand force-compliant guiding. The LARS system introduced a number of other features that have since become commonplace or are still the subject of active research. Examples include: voice control and information interfaces; video and auditory “sensory substitution” display of information; visual tracking of surgical tools; automated positioning of tools to targets designated by the surgeon; saving and returning the endoscope to designated views; and “virtual fixtures” for enforcing safety barriers and for helping a surgeon position and manipulate surgical tools.

Selected publications from the IBM phase of this work:

- R. H. Taylor, J. Funda, B. Eldridge, K. Gruben, D. LaRose, S. Gomory, M. Talamini, L. R. Kavoussi, and J. Anderson, "A Telerobotic Assistant for Laparoscopic Surgery", IEEE Engineering in Medicine and Biology Magazine, vol. 14, no. 3, pp. 279-287, April-May, 1995.

- J. Funda, K. Gruben, B. Eldridge, S. Gomory, and R. Taylor, "Control and evaluation of a 7-axis surgical robot for laparoscopy", in Proc 1995 IEEE Int. Conf. on Robotics and Automation, Nagoya, Japan, May, 1995.

- B. Eldridge, K. Gruben, D. LaRose, J. Funda, S. Gomory, J. Karidis, G. McVicker, R. Taylor, and J. Anderson, "A Remote Center of Motion Robotic Arm for Computer Assisted Surgery", Robotica, vol. 14, no. 1 (Jan-Feb), pp. 103-109, 1996.

"Steady Hand" Cooperative Control

The Robodoc and LARS systems both provided a form of hand-over-hand “steady hand” robot control, in which the surgeon and robot both hold the surgical tool and the robot moves in compliance with forces exerted by the surgeon on the tool. Because the robot performs the actual motion, that motion can be very precise and the effects of hand tremor are eliminated. Virtual fixtures are also readily incorporated into the constrained optimization framework that I developed for control of the LARS system.
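The control law is not given here, so the following is a deliberately simplified sketch of admittance-style “steady hand” control with one virtual fixture. The desired velocity is proportional to the force the surgeon applies to the tool; a planar safety barrier is then enforced by choosing the velocity closest (in least squares) to that desired velocity which keeps the tool on the allowed side of the plane. With a single linear constraint, this tiny constrained optimization has a closed-form projection solution; the LARS framework handled richer objectives and constraints. The gain, time step, units, and the name `steady_hand_step` are assumptions.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def steady_hand_step(position, force, gain, dt, normal, offset):
    """One control cycle of force-proportional motion with a planar virtual fixture.

    Desired velocity: v_des = gain * force (e.g. gain in (mm/s)/N).
    Fixture: the next position must satisfy dot(normal, x_next) >= offset.
    Returns (commanded_velocity, next_position).
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    v_des = [gain * f for f in force]
    # Rewrite the position constraint as a velocity constraint:
    #   dot(normal, position + v * dt) >= offset  <=>  dot(normal, v) >= c
    c = (offset - dot(normal, position)) / dt
    slack = dot(normal, v_des) - c
    if slack >= 0:
        v = v_des  # desired motion is already allowed
    else:
        # Closest allowed velocity: project v_des onto the constraint boundary.
        nn = dot(normal, normal)
        v = [vd - slack / nn * n for vd, n in zip(v_des, normal)]
    return v, [p + vi * dt for p, vi in zip(position, v)]

# Example: tool 5 mm above a barrier plane z = 0, surgeon pushes down and sideways.
v, pos = steady_hand_step([0.0, 0.0, 5.0], [2.0, 0.0, -10.0],
                          gain=1.0, dt=1.0, normal=[0.0, 0.0, 1.0], offset=0.0)
# v == [2.0, 0.0, -5.0]; pos == [2.0, 0.0, 0.0]: the tool stops on the plane but may slide along it
```

Projecting the velocity, rather than simply halting, keeps the sideways component of the surgeon's intent, which is what makes such a barrier feel like a surface the tool can slide along.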

The steady-hand cooperative control paradigm has subsequently been applied to many medical robots, both at Johns Hopkins and elsewhere. Product examples include Stryker’s Mako® orthopaedic robots; a novel robot for head-and-neck microsurgery, developed at Johns Hopkins and being commercialized by the startup company Galen Robotics; and the KU Leuven ophthalmology system mentioned above.

Selected publications from the IBM phase of this work:

- J. Funda, R. Taylor, B. Eldridge, S. Gomory, and K. Gruben, "Constrained Cartesian motion control for teleoperated surgical robots", IEEE Transactions on Robotics and Automation, vol. 12, no. 3, pp. 453-466, 1996.

Publications and Patents from my IBM Work

Overall, my IBM medical robotics work resulted in 13 journal papers and 29 refereed conference papers. Patents provide another measure of its impact: this work led to 24 issued US patents, which have collected over 7800 citations. Many of these patents were licensed commercially to medical robotics companies, including Integrated Surgical Systems (now Think Surgical) and Intuitive Surgical (manufacturer of the DaVinci robots).

The claims in these medical robotics patents cover many fundamental concepts and techniques in medical robotics. For example, the patent family titled "Image-Directed Robotic System for Robotic Surgery Including Redundant Consistency Checking" (US 5,086,401, 5,299,288, and 5,408,409) covers orthopaedic surgery robots similar to Robodoc. Claim 10 of US 5,408,409, for instance, describes a system for planning the placement of an implant shape relative to cross-sectional images of patient anatomy and using a robot to cut the shape. Other claims in the patent family cover redundant safety checking using a variety of sensing means, monitoring of tool-tissue interaction forces, providing feedback to the surgeon during the procedure, and other key elements of any robotic system intended for orthopaedic use. Other patents related to the Robodoc work cover methods for 2D-3D registration between CT and X-ray images and for controlling the insertion path of implants into the prepared bone cavity.
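As a hedged illustration of redundant consistency checking (not the patented method, whose details are not given here), the sketch below compares two independent estimates of the cutter tip position, one computed from the robot's own kinematics and one reported by an external tracker, and latches a halt if they disagree by more than a tolerance for several consecutive samples or if the tracker stops reporting. The class name, the tolerance, and the consecutive-sample rule are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass

@dataclass
class ConsistencyMonitor:
    """Hypothetical cross-check between two independent cutter-tip position estimates."""
    tolerance_mm: float = 1.0  # assumed maximum allowed disagreement
    max_consecutive: int = 3   # assumed number of bad samples tolerated before halting
    violations: int = 0
    halted: bool = False

    def update(self, kinematic_tip, tracked_tip):
        """Return True if motion may continue this cycle, False once halted."""
        if self.halted:
            return False  # latched: only an explicit reset should allow motion again
        if tracked_tip is None or math.dist(kinematic_tip, tracked_tip) > self.tolerance_mm:
            self.violations += 1  # disagreement or missing independent measurement
        else:
            self.violations = 0
        if self.violations >= self.max_consecutive:
            self.halted = True
        return not self.halted

# Example: one large disagreement, recovery, a tracker dropout, then a second bad sample.
monitor = ConsistencyMonitor(tolerance_mm=1.0, max_consecutive=2)
samples = [((0, 0, 0), (0.2, 0, 0)), ((1, 0, 0), (2.5, 0, 0)), ((2, 0, 0), (2.1, 0, 0)),
           ((3, 0, 0), None), ((4, 0, 0), (6, 0, 0)), ((5, 0, 0), (5, 0, 0))]
results = [monitor.update(k, t) for k, t in samples]
# results == [True, True, True, True, False, False]
```

Treating a missing tracker reading as a violation, and latching the halt, are conservative choices: the check fails toward stopping the robot rather than toward continuing to cut.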

In addition to the RCM patent discussed earlier, the patent families associated with the LARS robot and the craniofacial surgery system cover many aspects of minimally invasive surgery, human-machine interfaces, and surgical navigation. The family titled "System and method for augmentation of surgery" (US 6,024,695, 5,402,801, 5,630,431, 6,231,526, and 6,547,782), originally filed on June 13, 1991, includes many claims covering human-machine interaction in surgical robotics, including voice interfaces; haptic, auditory, and visual feedback; moving a tool to a target designated in a video image; interactive monitoring and modification of surgical plans; virtual fixtures for limiting motion of an instrument or guiding it on a path; and systems combining passive manipulation aids with navigational tracking devices. Other patents arising from the same June 1991 disclosure contain further concepts that have become important in the field. For example, US Patent 5,445,166, “System for Advising a Surgeon”, claims systems and methods for providing real-time interactive feedback to a surgeon comparing a surgical plan to sensed surgical execution, together with ways to modify the surgical plan interactively.

The patent family titled “System and method for augmentation of endoscopic surgery” (US 5,417,210, 5,749,362, 6,201,984 B1, 7,447,537 B1, and 8,123,675 B2), originally filed May 27, 1992, likewise describes numerous surgeon interfaces, including the use of computer vision to define anatomic features and to control tool motion; virtual fixtures for maintaining constant tool-to-anatomy distance constraints; and voice command and information interfaces.